Application security people, like anyone else, can make mistakes. Hasty actions and bad assumptions lead to incomplete discovery of flaws, or to outright disaster. In the worst case, a clumsy attempt to discover security problems can itself cause a breach.

Attention to good practices will mean better security analysis and safer releases. Here are some of the errors that AppSec teams should be aware of and do their best to avoid.

The first step in analyzing an application isn’t to read the code or start testing it. It’s to know in detail what it’s supposed to do. Skip that, and you don’t really understand why the developers made the design decisions they made.

From a security standpoint, the important questions are what information the app needs, how it gets it, and what it presents to the user. When the code seems to collect more than it needs, there may or may not be a good reason; you can’t tell without knowing its purpose. If it really does need to grab sensitive information, knowing that helps identify the parts of the code that need extra attention.

Does the application use third-party services? You need to know about that in order to check whether it’s using them safely. If it’s pulling in a lot of information from them, you need to know what it actually requires.

If you’ve overlooked some functionality in the application, you can’t verify its security. It might not be a major feature; a capability designed just for administrative convenience can open up a huge hole. Make sure you know everything it’s designed to do, including capabilities that aren’t in the user manual.

To do a good job checking an application, you need to communicate with the people who created it. What design decisions did they make, and why? What concerns do they have?

Sometimes the AppSec team would love to talk with the developers but doesn’t get a chance. The organizational structure could make it difficult, or the developers could be so pressed on their next project that they aren’t given time to talk with AppSec. Project managers need to make sure both groups get opportunities to talk.

It isn’t always possible to meet in person, but collaboration tools can fill the gap. Bug trackers, chat software, and the occasional video conference help keep developers and security testers aware of each other. In the process, AppSec people learn more about how the developers work, and coders get a better understanding of security concerns.

The best way to make code secure is to write it that way from the start. Understanding how AppSec thinks helps developers do that, and security people can recommend development tools that will help. When code isn’t secure, sometimes fixing a few lines solves the problem, but sometimes a whole section needs to be rewritten. That isn’t easy news to give developers, but communication needs to be good enough that it’s possible.

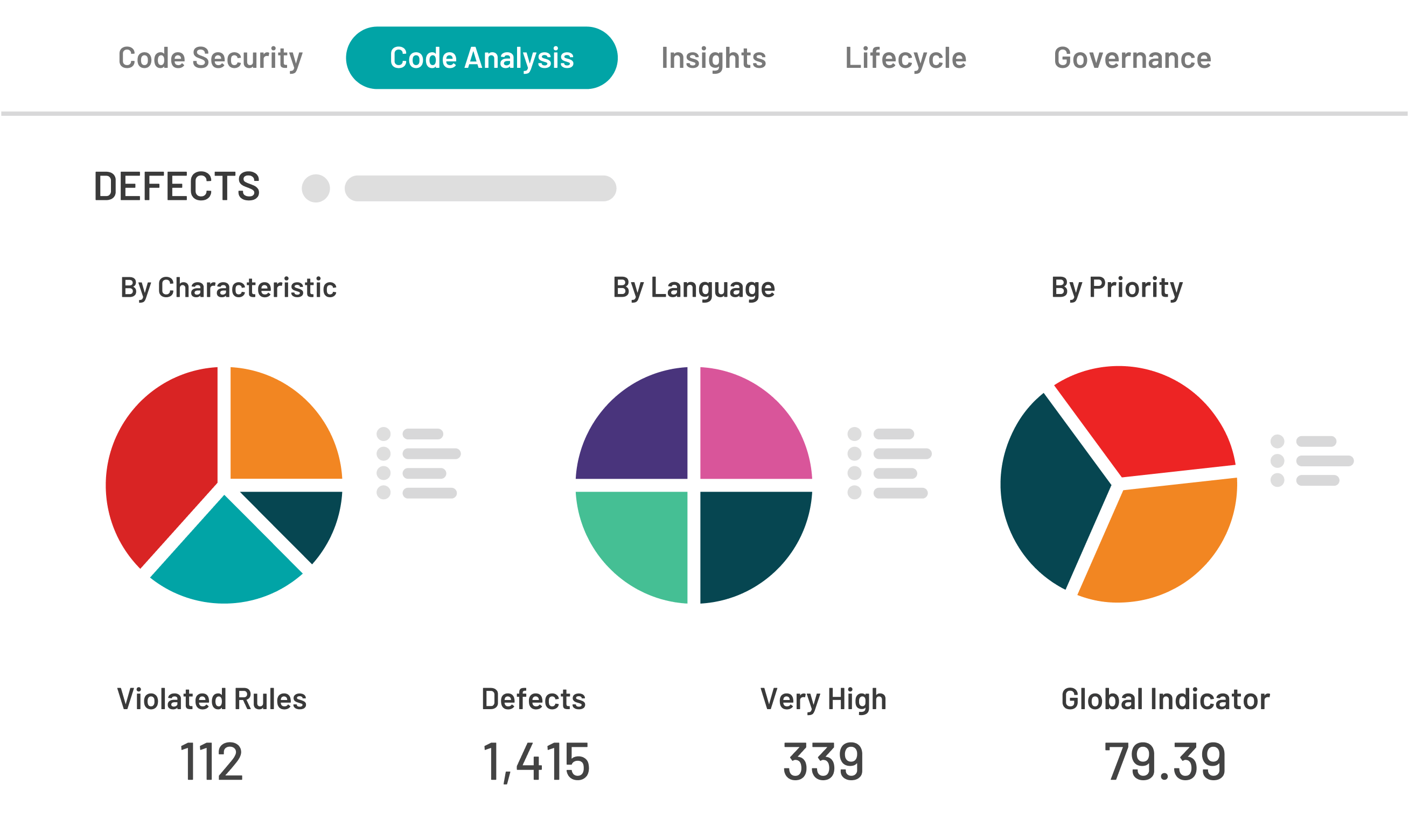

The perfect is the enemy of the good. Try to give full attention to everything, and you won’t give enough attention to the most critical things.

Some parts of the code need more attention than others. An application that crops images is less likely to cause security problems than one that processes medical records. AppSec teams need to do risk assessment so they can focus more effort where there’s a bigger chance of trouble.

Profiling the code against the OWASP Top Ten risks helps to set priorities. Authentication, database access, and operations on sensitive data are a few indicators of risk.

The overall purpose of the application gives clues about its potential risk. An application that only edits personal notes might still have flaws that leak them, so it can’t be ignored. One that handles company financial data, however, is a bigger risk and needs more attention.

A weighted list of potential risk factors is a valuable tool. Each application gets a risk score equal to the total weight of the factors that apply to it, and applications with higher scores get more testing and more questions.
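A weighted risk list can be as simple as a table of factors and a sum. The sketch below is illustrative only: the factor names, the weights, and the `App`, `risk_score`, and `prioritize` names are hypothetical assumptions, not a standard scoring scheme, and a real team would tune them to its own environment.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical risk factors and weights, loosely following the indicators above
# (authentication, database access, sensitive data). Tune these for your own org.
RISK_WEIGHTS: dict[str, int] = {
    "authentication": 5,
    "database_access": 4,
    "sensitive_data": 5,
    "third_party_services": 3,
    "admin_features": 3,
}


@dataclass
class App:
    name: str
    factors: set[str] = field(default_factory=set)


def risk_score(app: App, weights: dict[str, int] = RISK_WEIGHTS) -> int:
    """Sum the weights of the factors that apply; unknown factors count as 0."""
    return sum(weights[f] for f in app.factors if f in weights)


def prioritize(apps: list[App]) -> list[tuple[str, int]]:
    """Return (name, score) pairs, highest risk first."""
    scored = [(app.name, risk_score(app)) for app in apps]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    apps = [
        App("image-cropper", {"third_party_services"}),
        App("notes", {"authentication", "sensitive_data"}),
        App("finance-reports",
            {"authentication", "database_access", "sensitive_data", "admin_features"}),
    ]
    for name, score in prioritize(apps):
        print(name, score)
    # finance-reports 17
    # notes 10
    # image-cropper 3
```

The ranking only sets the order of attention; a low score, like the notes app’s, still means the application gets tested, just with less depth.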

Testing at the wrong time or with the wrong data can cause serious trouble. Starting tests before the test environment is ready, or without authorization, can trigger security alerts and create chaos until the administrators figure out it was their own testers. Doing it right depends partly on communication, but the concern here is a different one: when, where, and on what data testing happens.

A test environment needs to be sandboxed. The point of testing is to try to do damage, but it shouldn’t be real damage. Risks from uncoordinated testing include sending live email, improper access to third-party services, interference with development work, and setting off alarms.

In a CI/CD environment, there should always be a test deployment that’s safe to attack and break. The DevOps people need to make sure that whenever it’s up and running, it’s safe to test. Coordination is still necessary so that AppSec knows when they’ll need to start a round of testing, but mistakes won’t do much damage.

The worst mistake is testing the production environment. That could leak confidential information and disrupt service. Testers always need to be clear on where they’re authorized to test.
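Tooling can back up that rule. A minimal sketch, assuming the authorized scope is kept as an allowlist of hostnames, is a guard that every test script calls before sending any traffic. The hostnames, `AUTHORIZED_TEST_HOSTS`, `UnauthorizedTargetError`, and `check_target` are hypothetical names for illustration; only `urllib.parse.urlsplit` is standard library.

```python
from __future__ import annotations

from urllib.parse import urlsplit

# Hypothetical allowlist: the only hosts testers are authorized to attack.
AUTHORIZED_TEST_HOSTS: frozenset[str] = frozenset(
    {"staging.internal.example.com", "sandbox.example.com"}
)


class UnauthorizedTargetError(Exception):
    """Raised when a test is pointed at a host outside the authorized scope."""


def check_target(url: str, allowed: frozenset[str] = AUTHORIZED_TEST_HOSTS) -> str:
    """Return the hostname if it is in scope; otherwise refuse to proceed.

    urlsplit() lowercases .hostname and strips any port. A URL without a
    scheme has no hostname, so it is refused rather than guessed at.
    """
    host = urlsplit(url).hostname
    if host is None or host not in allowed:
        raise UnauthorizedTargetError(f"{url!r} is not an authorized test target")
    return host


if __name__ == "__main__":
    print(check_target("https://staging.internal.example.com/login"))
    try:
        check_target("https://www.example.com/login")  # production: refused
    except UnauthorizedTargetError as err:
        print(err)
```

Failing closed is the design choice here: anything not explicitly listed, including a mistyped or scheme-less URL, stops the test instead of reaching a live system.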

Loose lips sink apps. People love to talk about their work, and it’s usually OK for AppSec people to talk about the kinds of issues they encounter. However, they need to avoid giving out information that could help outsiders to figure out weaknesses in specific software. Anyone who has access to code analyses and test results needs to be reminded regularly of the need to keep them confidential.

AppSec people don’t just study and test code to make it secure; they are part of the code’s security, and they have to guard their knowledge. How careful they need to be depends on the situation. Someone working on a missile tracking project should say nothing more than “I do software testing.” An academic development environment is less restrictive, though revealing anything that could allow improper access to student information would still be bad.

Anything that would help an outsider exploit a specific bug needs to stay confidential. Even after a bug is fixed, it has a way of recurring, and related bugs may still be lurking.

AppSec people carry a lot of responsibility. When they do their jobs well, they keep information safe and prevent downtime and financial loss. When they mess up, the consequences are sometimes serious. Being aware of common mistakes and avoiding them is a crucial part of keeping code safe.